SEVEN BASIC TOOLS OF QUALITY

From Wikipedia, the free encyclopedia

The Seven Basic Tools of Quality is a designation given to a fixed set of graphical techniques identified as being most helpful in troubleshooting issues related to quality.[1] They are called basic because they are suitable for people with little formal training in statistics and because they can be used to solve the vast majority of quality-related issues.[2]

- Cause-and-effect diagram (also known as the "fishbone" or Ishikawa diagram)

- Check sheet

- Control chart

- Histogram

- Pareto chart

- Scatter diagram

- Stratification (alternately, flow chart or run chart)

The designation arose in postwar Japan, inspired by the seven famous weapons of Benkei.[6] It was possibly introduced by Kaoru Ishikawa who in turn was influenced by a series of lectures W. Edwards Deming had given to Japanese engineers and scientists in 1950.[7] At that time, companies that had set about training their workforces in statistical quality control found that the complexity of the subject intimidated the vast majority of their workers and scaled back training to focus primarily on simpler methods which suffice for most quality-related issues.[8]

The Seven Basic Tools stand in contrast to more advanced statistical methods such as survey sampling, acceptance sampling, statistical hypothesis testing, design of experiments, multivariate analysis, and various methods developed in the field of operations research.[9]

ISHIKAWA DIAGRAM

Ishikawa diagrams (also called fishbone diagrams, herringbone diagrams, cause-and-effect diagrams, or Fishikawa) are causal diagrams created by Kaoru Ishikawa (1968) that show the causes of a specific event.[1][2] Common uses of the Ishikawa diagram are product design and quality defect prevention, to identify potential factors causing an overall effect. Each cause or reason for imperfection is a source of variation. Causes are usually grouped into major categories to identify these sources of variation. The categories typically include:

- People: Anyone involved with the process

- Methods: How the process is performed and the specific requirements for doing it, such as policies, procedures, rules, regulations and laws

- Machines: Any equipment, computers, tools, etc. required to accomplish the job

- Materials: Raw materials, parts, pens, paper, etc. used to produce the final product

- Measurements: Data generated from the process that are used to evaluate its quality

- Environment: The conditions, such as location, time, temperature, and culture in which the process operates

Ishikawa diagrams were popularized in the 1960s by Kaoru Ishikawa,[3] who pioneered quality management processes in the Kawasaki shipyards and in the process became one of the founding fathers of modern management.

The basic concept was first used in the 1920s, and is considered one of the seven basic tools of quality control.[4] It is known as a fishbone diagram because of its shape, similar to the side view of a fish skeleton.

Mazda Motors famously used an Ishikawa diagram in the development of the Miata sports car, where the required result was "Jinba Ittai" (Horse and Rider as One — jap. 人馬一体). The main causes included such aspects as "touch" and "braking" with the lesser causes including highly granular factors such as "50/50 weight distribution" and "able to rest elbow on top of driver's door". Every factor identified in the diagram was included in the final design.

CHECK SHEET

The check sheet is a form (document) used to collect data in real time at the location where the data is generated. The data it captures can be quantitative or qualitative. When the information is quantitative, the check sheet is sometimes called a tally sheet.[1]

The check sheet is one of the so-called Seven Basic Tools of Quality Control.[2]

The defining characteristic of a check sheet is that data are recorded by making marks ("checks") on it. A typical check sheet is divided into regions, and marks made in different regions have different significance. Data are read by observing the location and number of marks on the sheet.

Check sheets typically employ a heading that answers the Five Ws:

- Who filled out the check sheet

- What was collected (what each check represents, an identifying batch or lot number)

- Where the collection took place (facility, room, apparatus)

- When the collection took place (hour, shift, day of the week)

- Why the data were collected

Function

Kaoru Ishikawa identified five uses for check sheets in quality control:[3]

- To check the shape of the probability distribution of a process

- To quantify defects by type

- To quantify defects by location

- To quantify defects by cause (machine, worker)

- To keep track of the completion of steps in a multistep procedure (in other words, as a checklist)

Check sheet to assess the shape of a process's probability distribution

See also: Process capability

When assessing the probability distribution of a process one can record all process data and then wait to construct a frequency distribution at a later time. However, a check sheet can be used to construct the frequency distribution as the process is being observed.[4]

This type of check sheet consists of the following:

- A grid that captures the histogram bins in one dimension and the count or frequency of process observations in the corresponding bin in the other dimension

- Lines that delineate the upper and lower specification limits

Note that the extremes in process observations must be accurately predicted in advance of constructing the check sheet.

When the process distribution is ready to be assessed, the assessor fills out the check sheet's heading and actively observes the process. Each time the process generates an output, he or she measures (or otherwise assesses) the output, determines the bin in which the measurement falls, and adds to that bin's check marks.

When the observation period has concluded, the assessor should examine it as follows:[5]

- Do the check marks form a bell curve? Are values skewed? Is there more than one peak? Are there outliers?

- Do the check marks fall completely within the specification limits with room to spare? Or are there a significant number of check marks that fall outside the specification limits?

If there is evidence of non-normality or if the process is producing significant output near or beyond the specification limits, a process improvement effort to remove special-cause variation should be undertaken.
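The tallying procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not a standard implementation: the function names, the bin layout, the specification limits, and the measurements are all hypothetical. The bins are fixed before any data arrive, which mirrors the requirement that the extremes be predicted in advance.

```python
from collections import Counter

def tally_check_sheet(measurements, lower, width, n_bins):
    """Tally measurements into pre-drawn, equal-width bins (one mark per output)."""
    counts = Counter()
    for x in measurements:
        index = int((x - lower) // width)
        if not 0 <= index < n_bins:
            # The sheet was drawn too narrow: the extremes were mispredicted.
            raise ValueError(f"{x} falls outside the pre-drawn bins")
        counts[index] += 1
    return [counts[i] for i in range(n_bins)]

def render(counts, lower, width):
    """Draw the sheet as text, one row of marks per bin."""
    rows = []
    for i, c in enumerate(counts):
        start = lower + i * width
        rows.append(f"{start:6.2f} to {start + width:6.2f} | " + "X" * c)
    return "\n".join(rows)

# Hypothetical measurements and specification limits (assumptions for illustration).
measurements = [9.8, 10.1, 10.0, 10.3, 9.9, 10.0, 10.2, 9.7, 10.1, 10.0]
lsl, usl = 9.75, 10.25

counts = tally_check_sheet(measurements, lower=9.5, width=0.25, n_bins=4)
outside_spec = sum(1 for x in measurements if not lsl <= x <= usl)

print(render(counts, lower=9.5, width=0.25))
print("outside specification limits:", outside_spec)
```

Reading the rows of marks top to bottom gives the same picture as a histogram turned on its side, which is what the assessor inspects for skew, multiple peaks, and outliers.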

Check sheet for defect type

See also: Pareto analysis

When a process has been identified as a candidate for improvement, it's important to know what types of defects occur in its outputs and their relative frequencies. This information serves as a guide for investigating and removing the sources of defects, starting with the most frequently occurring.[6]

This type of check sheet consists of the following:

- A single column listing each defect category

- One or more columns in which the observations for different machines, materials, methods, operators are to be recorded

Note that the defect categories and how process outputs are to be placed into these categories must be agreed to and spelled out in advance of constructing the check sheet. Additionally, rules for recording the presence of defects of different types when observed for the same process output must be set down.

When the process distribution is ready to be assessed, the assessor fills out the check sheet's heading and actively observes the process. Each time the process generates an output, he or she assesses the output for defects using the agreed-upon methods, determines the category in which the defect falls, and adds to that category's check marks. If no defects are found for a process output, no check mark is made.

When the observation period has concluded, the assessor should generate a Pareto chart from the resulting data. This chart then determines the order in which the process is to be investigated and sources of variation that lead to defects removed.
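As a rough sketch of this type of sheet, the Python fragment below tallies defects by category and by machine, then ranks the categories by total count, which is the ordering a Pareto chart would use. The defect names, machine labels, and observations are invented for illustration.

```python
from collections import defaultdict

# Hypothetical observations: one (defect category, machine) pair per defect found.
observations = [
    ("scratch", "M1"), ("dent", "M2"), ("scratch", "M1"),
    ("crack", "M2"), ("scratch", "M2"), ("dent", "M1"),
]

# Rows are defect categories, columns are machines, cells are tally counts.
sheet = defaultdict(lambda: defaultdict(int))
for defect, machine in observations:
    sheet[defect][machine] += 1

# Row totals, ranked from most to least frequent.
totals = {defect: sum(columns.values()) for defect, columns in sheet.items()}
ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)

for defect, count in ranked:
    print(defect, dict(sheet[defect]), count)
```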

Check sheet for defect location

Main article: Defect concentration diagram

When process outputs are objects for which defects may be observed in varying locations (for example bubbles in laminated products or voids in castings), a defect concentration diagram is invaluable.[7] Note that while most check sheet types aggregate observations from many process outputs, typically one defect location check sheet is used per process output.

This type of check sheet consists of the following:

- A to-scale diagram of the object from each of its sides, optionally partitioned into equally-sized sections

When the process distribution is ready to be assessed, the assessor fills out the check sheet's heading and actively observes the process. Each time the process generates an output, he or she assesses the output for defects and marks the section of each view where each is found. If no defects are found for a process output, no check mark is made.

When the observation period has concluded, the assessor should reexamine each check sheet and form a composite of the defect locations. Using his or her knowledge of the process in conjunction with the locations should reveal the source or sources of variation that produce the defects.

Check sheet for defect cause

When a process has been identified as a candidate for improvement, effort may be required to try to identify the source of the defects by cause.[8]

This type of check sheet consists of the following:

- One or more columns listing each suspected cause (for example machine, material, method, environment, operator)

- One or more columns listing the period during which process outputs are to be observed (for example hour, shift, day)

- One or more symbols to represent the different types of defects to be recorded—these symbols take the place of the check marks of the other types of charts.

Note that the defect categories and how process outputs are to be placed into these categories must be agreed to and spelled out in advance of constructing the check sheet. Additionally, rules for recording the presence of defects of different types when observed for the same process output must be set down.

When the process distribution is ready to be assessed, the assessor fills out the check sheet's heading. For each combination of suspected causes, the assessor actively observes the process. Each time the process generates an output, he or she assesses the output for defects using the agreed-upon methods, determines the category in which the defect falls, and adds the symbol corresponding to that defect category to the cell in the grid corresponding to the combination of suspected causes. If no defects are found for a process output, no symbol is entered.

When the observation period has concluded, the combinations of suspect causes with the most symbols should be investigated for the sources of variation that produce the defects of the type noted.

Optionally, the cause-and-effect diagram may be used to provide a similar diagnostic. The assessor simply places a check mark next to the "twig" on the branch of the diagram corresponding to the suspected cause when he or she observes a defect.

Checklist

Main article: Checklist

While the check sheets discussed above are all for capturing and categorizing observations, the checklist is intended as a mistake-proofing aid when carrying out multi-step procedures, particularly during the checking and finishing of process outputs.

This type of check sheet consists of the following:

- An (optionally numbered) outline of the subtasks to be performed

- Boxes or spaces in which check marks may be entered to indicate when the subtask has been completed

Notations should be made in the order that the subtasks are actually completed.[9]

CONTROL CHART

Control charts, also known as Shewhart charts (after Walter A. Shewhart) or process-behavior charts, in statistical process control are tools used to determine if a manufacturing or business process is in a state of statistical control.

If analysis of the control chart indicates that the process is currently under control (i.e., is stable, with variation only coming from sources common to the process), then no corrections or changes to process control parameters are needed or desired. In addition, data from the process can be used to predict the future performance of the process. If the chart indicates that the monitored process is not in control, analysis of the chart can help determine the sources of variation, as this will result in degraded process performance.[1] A process that is stable but operating outside of desired (specification) limits (e.g., scrap rates may be in statistical control but above desired limits) needs to be improved through a deliberate effort to understand the causes of current performance and fundamentally improve the process.[2]

The control chart is one of the seven basic tools of quality control.[3] Typically control charts are used for time-series data, though they can also be used for data that have logical comparability (e.g., samples that were all taken at the same time, or the performance of different individuals); however, the type of chart used for this requires consideration.[4]

History

The control chart was invented by Walter A. Shewhart while working for Bell Labs in the 1920s.[citation needed] The company's engineers had been seeking to improve the reliability of their telephony transmission systems. Because amplifiers and other equipment had to be buried underground, there was a business need to reduce the frequency of failures and repairs. By 1920, the engineers had already realized the importance of reducing variation in a manufacturing process. Moreover, they had realized that continual process-adjustment in reaction to non-conformance actually increased variation and degraded quality. Shewhart framed the problem in terms of common- and special-causes of variation and, on May 16, 1924, wrote an internal memo introducing the control chart as a tool for distinguishing between the two. Shewhart's boss, George Edwards, recalled: "Dr. Shewhart prepared a little memorandum only about a page in length. About a third of that page was given over to a simple diagram which we would all recognize today as a schematic control chart. That diagram, and the short text which preceded and followed it set forth all of the essential principles and considerations which are involved in what we know today as process quality control."[5] Shewhart stressed that bringing a production process into a state of statistical control, where there is only common-cause variation, and keeping it in control, is necessary to predict future output and to manage a process economically.

Shewhart created the basis for the control chart and the concept of a state of statistical control by carefully designed experiments. While Shewhart drew from pure mathematical statistical theories, he understood data from physical processes typically produce a "normal distribution curve" (a Gaussian distribution, also commonly referred to as a "bell curve"). He discovered that observed variation in manufacturing data did not always behave the same way as data in nature (Brownian motion of particles). Shewhart concluded that while every process displays variation, some processes display controlled variation that is natural to the process, while others display uncontrolled variation that is not present in the process causal system at all times.[6]

In 1924 or 1925, Shewhart's innovation came to the attention of W. Edwards Deming, then working at the Hawthorne facility. Deming later worked at the United States Department of Agriculture and became the mathematical advisor to the United States Census Bureau. Over the next half a century, Deming became the foremost champion and proponent of Shewhart's work. After the defeat of Japan at the close of World War II, Deming served as statistical consultant to the Supreme Commander for the Allied Powers. His ensuing involvement in Japanese life, and long career as an industrial consultant there, spread Shewhart's thinking, and the use of the control chart, widely in Japanese manufacturing industry throughout the 1950s and 1960s.

Chart details

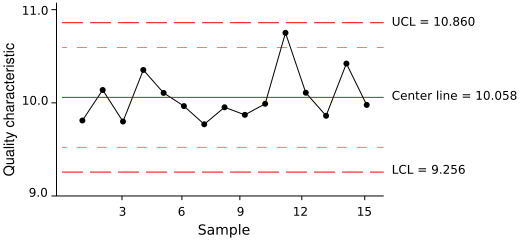

A control chart consists of:

- Points representing a statistic (e.g., a mean, range, proportion) of measurements of a quality characteristic in samples taken from the process at different times [the data]

- The mean of this statistic using all the samples is calculated (e.g., the mean of the means, mean of the ranges, mean of the proportions)

- A centre line is drawn at the value of the mean of the statistic

- The standard error (e.g., standard deviation/sqrt(n) for the mean) of the statistic is also calculated using all the samples

- Upper and lower control limits (sometimes called "natural process limits") that indicate the threshold at which the process output is considered statistically 'unlikely' and are drawn typically at 3 standard errors from the centre line
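The construction above can be sketched for a chart of subgroup means. The Python below is an illustrative simplification: it assumes equal-sized subgroups and estimates the process standard deviation by pooling the within-subgroup variances, whereas practical charts often use tabulated constants based on the average range or average standard deviation. The function name and the data are hypothetical.

```python
import math
import statistics

def xbar_limits(subgroups, k=3.0):
    """Return (lower limit, centre line, upper limit) for a chart of subgroup means."""
    n = len(subgroups[0])
    if any(len(group) != n for group in subgroups):
        raise ValueError("all subgroups must have the same size")
    means = [statistics.fmean(group) for group in subgroups]
    centre = statistics.fmean(means)  # the mean of the means
    # Assumption: pool the within-subgroup sample variances to estimate sigma^2.
    pooled_variance = statistics.fmean([statistics.variance(g) for g in subgroups])
    std_error = math.sqrt(pooled_variance / n)  # standard deviation / sqrt(n)
    return centre - k * std_error, centre, centre + k * std_error

# Hypothetical subgroups of two measurements each.
subgroups = [[10.0, 12.0], [11.0, 13.0], [9.0, 11.0]]
lcl, centre, ucl = xbar_limits(subgroups)

# Indices of subgroups whose mean falls outside the control limits.
signals = [i for i, g in enumerate(subgroups)
           if not lcl <= statistics.fmean(g) <= ucl]
print(lcl, centre, ucl, signals)
```

Passing `k=2.0` gives the two-standard-error warning limits that some charts add as separate lines.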

The chart may have other optional features, including:

- Upper and lower warning or control limits, drawn as separate lines, typically two standard errors above and below the centre line

- Division into zones, with the addition of rules governing frequencies of observations in each zone

- Annotation with events of interest, as determined by the Quality Engineer in charge of the process's quality

Chart usage

If the process is in control (and the process statistic is normal), 99.7300% of all the points will fall between the control limits. Any observations outside the limits, or systematic patterns within, suggest the introduction of a new (and likely unanticipated) source of variation, known as a special-cause variation. Since increased variation means increased quality costs, a control chart "signaling" the presence of a special-cause requires immediate investigation.

This makes the control limits very important decision aids. The control limits provide information about the process behavior and have no intrinsic relationship to any specification targets or engineering tolerance. In practice, the process mean (and hence the centre line) may not coincide with the specified value (or target) of the quality characteristic because the process's design simply cannot deliver the process characteristic at the desired level.

Control charts omit specification limits or targets because of the tendency of those involved with the process (e.g., machine operators) to focus on performing to specification when in fact the least-cost course of action is to keep process variation as low as possible. Attempting to make a process whose natural centre is not the same as the target perform to target specification increases process variability, increases costs significantly, and is the cause of much inefficiency in operations. Process capability studies do examine the relationship between the natural process limits (the control limits) and specifications, however.

The purpose of control charts is to allow simple detection of events that are indicative of actual process change. This simple decision can be difficult where the process characteristic is continuously varying; the control chart provides statistically objective criteria of change. When a change is detected and considered good, its cause should be identified and possibly become the new way of working; where the change is bad, its cause should be identified and eliminated.

The purpose in adding warning limits or subdividing the control chart into zones is to provide early notification if something is amiss. Instead of immediately launching a process improvement effort to determine whether special causes are present, the Quality Engineer may temporarily increase the rate at which samples are taken from the process output until it's clear that the process is truly in control. Note that with three-sigma limits, common-cause variations result in signals less than once out of every twenty-two points for skewed processes and about once out of every three hundred seventy (1/370.4) points for normally distributed processes.[7] The two-sigma warning levels will be reached about once for every twenty-two (1/21.98) plotted points in normally distributed data. (For example, the means of sufficiently large samples drawn from practically any underlying distribution whose variance exists are normally distributed, according to the Central Limit Theorem.)
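The false-signal rates quoted for normally distributed data follow directly from the tails of the standard normal distribution. The short Python check below reproduces them, assuming only that the plotted statistic is normal; the function name is illustrative.

```python
import math

def two_sided_tail(k):
    """Probability that a standard normal value lies more than k sigma from the mean."""
    return math.erfc(k / math.sqrt(2))

for k in (2, 3):
    p = two_sided_tail(k)
    print(f"{k}-sigma limits: P(outside) = {p:.6f}, about 1 in {1 / p:.2f} points")

# Share of points expected inside three-sigma limits.
inside_three_sigma = 1 - two_sided_tail(3)
print(f"{100 * inside_three_sigma:.4f}% of points inside three-sigma limits")
```

The three-sigma case gives roughly one point in 370.4 and the two-sigma case roughly one in 21.98, matching the figures above, and the share inside three-sigma limits rounds to 99.7300%.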

HISTOGRAM

In statistics, a histogram is a graphical representation of the distribution of data. It is an estimate of the probability distribution of a continuous variable and was first introduced by Karl Pearson.[1] A histogram is a representation of tabulated frequencies, shown as adjacent rectangles, erected over discrete intervals (bins), with an area equal to the frequency of the observations in the interval. The height of a rectangle is also equal to the frequency density of the interval, i.e., the frequency divided by the width of the interval. The total area of the histogram is equal to the number of data points. A histogram may also be normalized to display relative frequencies. It then shows the proportion of cases that fall into each of several categories, with the total area equaling 1. The categories are usually specified as consecutive, non-overlapping intervals of a variable. The categories (intervals) must be adjacent, and often are chosen to be of the same size.[2] The rectangles of a histogram are drawn so that they touch each other to indicate that the original variable is continuous.[3]

Histograms are used to plot the density of data, and often for density estimation: estimating the probability density function of the underlying variable. The total area of a histogram used for probability density is always normalized to 1. If the length of the intervals on the x-axis are all 1, then a histogram is identical to a relative frequency plot.
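The relationship between frequency, bin width, and rectangle height can be checked numerically. The Python sketch below uses hypothetical data and deliberately unequal bin widths; the function name is illustrative. It computes the frequency density of each bin and confirms that the total area equals the number of observations, or 1 after normalization.

```python
def histogram(data, edges):
    """Return (counts, widths, densities) for bins defined by ascending edges."""
    counts = [0] * (len(edges) - 1)
    for x in data:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            # Bins are half-open [a, b), except the last, which includes its right edge.
            if edges[i] <= x < edges[i + 1] or (is_last and x == edges[-1]):
                counts[i] += 1
                break
    widths = [b - a for a, b in zip(edges, edges[1:])]
    densities = [c / w for c, w in zip(counts, widths)]  # rectangle heights
    return counts, widths, densities

# Hypothetical data and bin edges (the last bin is three times as wide as the others).
data = [1, 2, 2, 3, 5, 7, 8, 9]
edges = [0, 2, 4, 10]

counts, widths, densities = histogram(data, edges)
area = sum(d * w for d, w in zip(densities, widths))
normalized_area = sum((d / len(data)) * w for d, w in zip(densities, widths))
print(counts, densities, area, normalized_area)
```

Because the last bin is wider, its rectangle is lower than its count alone would suggest, which is why area rather than height represents frequency.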

An alternative to the histogram is kernel density estimation, which uses a kernel to smooth samples. This will construct a smooth probability density function, which will in general more accurately reflect the underlying variable.

The histogram is one of the seven basic tools of quality control.[4]

PARETO CHART

A Pareto chart, named after Vilfredo Pareto, is a type of chart that contains both bars and a line graph, where individual values are represented in descending order by bars, and the cumulative total is represented by the line.

The left vertical axis is the frequency of occurrence, but it can alternatively represent cost or another important unit of measure. The right vertical axis is the cumulative percentage of the total number of occurrences, total cost, or total of the particular unit of measure. Because the reasons are in decreasing order, the cumulative function is a concave function. To take the example of late arrivals, it is sufficient to solve the first three issues in order to lower the number of late arrivals by 78%.

The purpose of the Pareto chart is to highlight the most important among a (typically large) set of factors. In quality control, it often represents the most common sources of defects, the highest occurring type of defect, or the most frequent reasons for customer complaints, and so on. Wilkinson (2006) devised an algorithm for producing statistically based acceptance limits (similar to confidence intervals) for each bar in the Pareto chart.
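The two series plotted on a Pareto chart, the sorted bars and the cumulative percentage line, can be computed as in the Python sketch below. The defect counts are made up for illustration and the function name is hypothetical.

```python
def pareto_table(counts):
    """Return rows of (category, count, cumulative percent), largest count first."""
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    total = sum(counts.values())
    rows = []
    running = 0
    for name, count in ranked:
        running += count
        rows.append((name, count, 100.0 * running / total))
    return rows

# Hypothetical defect counts.
defects = {"dent": 25, "scratch": 40, "other": 5, "crack": 20, "stain": 10}

rows = pareto_table(defects)
for name, count, cumulative in rows:
    print(f"{name:8s} {count:3d} {cumulative:6.1f}%")
```

In this invented data set the first three categories account for 85% of all defects, which is the kind of reading the cumulative line is meant to support.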

These charts can be generated by simple spreadsheet programs, such as OpenOffice.org Calc and Microsoft Excel and specialized statistical software tools as well as online quality charts generators.

The Pareto chart is one of the seven basic tools of quality control.[1]

SCATTER PLOT

A scatter plot, scatterplot, or scattergraph is a type of mathematical diagram using Cartesian coordinates to display values for two variables for a set of data.

The data is displayed as a collection of points, each having the value of one variable determining the position on the horizontal axis and the value of the other variable determining the position on the vertical axis.[2] This kind of plot is also called a scatter chart, scattergram, scatter diagram,[3] or scatter graph.

A scatter plot is used when a variable exists that is under the control of the experimenter. If a parameter exists that is systematically incremented and/or decremented by the other, it is called the control parameter or independent variable and is customarily plotted along the horizontal axis. The measured or dependent variable is customarily plotted along the vertical axis. If no dependent variable exists, either type of variable can be plotted on either axis and a scatter plot will illustrate only the degree of correlation (not causation) between two variables.

A scatter plot can suggest various kinds of correlations between variables with a certain confidence interval. For example, for weight and height, weight would be on the x axis and height on the y axis. Correlations may be positive (rising), negative (falling), or null (uncorrelated). If the pattern of dots slopes from lower left to upper right, it suggests a positive correlation between the variables being studied. If the pattern of dots slopes from upper left to lower right, it suggests a negative correlation. A line of best fit (alternatively called 'trendline') can be drawn in order to study the correlation between the variables. An equation for the correlation between the variables can be determined by established best-fit procedures. For a linear correlation, the best-fit procedure is known as linear regression and is guaranteed to generate a correct solution in a finite time. No universal best-fit procedure is guaranteed to generate a correct solution for arbitrary relationships. A scatter plot is also very useful when we wish to see how two comparable data sets agree with each other. In this case, an identity line, i.e., a y=x line, or a 1:1 line, is often drawn as a reference. The more the two data sets agree, the more the scatters tend to concentrate in the vicinity of the identity line; if the two data sets are numerically identical, the scatters fall on the identity line exactly.
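For the linear case, the line of best fit and the strength of the correlation can be computed in closed form. The Python sketch below uses ordinary least squares and the Pearson correlation coefficient on a small invented data set; the function name and data are hypothetical.

```python
import math

def fit_line(xs, ys):
    """Return (slope, intercept, r) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)  # Pearson correlation coefficient
    return slope, intercept, r

# Hypothetical paired observations.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

slope, intercept, r = fit_line(xs, ys)
print(f"y = {slope:.2f}x + {intercept:.2f}, r = {r:.3f}")
```

A positive `r` corresponds to dots sloping from lower left to upper right and a negative `r` to the opposite slope. A value near zero indicates no linear correlation, although a nonlinear relationship may still be visible in the plot.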

One of the most powerful aspects of a scatter plot, however, is its ability to show nonlinear relationships between variables. Furthermore, if the data is represented by a mixture model of simple relationships, these relationships will be visually evident as superimposed patterns.

Example

For example, to display the relationship between a person's lung capacity and how long that person could hold his breath, a researcher would choose a group of people to study, then measure each one's lung capacity (first variable) and how long that person could hold his breath (second variable). The researcher would then plot the data in a scatter plot, assigning "lung capacity" to the horizontal axis, and "time holding breath" to the vertical axis.

A person with a lung capacity of 400 ml who held his breath for 21.7 seconds would be represented by a single dot on the scatter plot at the point (400, 21.7) in the Cartesian coordinates. The scatter plot of all the people in the study would enable the researcher to obtain a visual comparison of the two variables in the data set, and will help to determine what kind of relationship there might be between the two variables.

STRATIFIED SAMPLING

In statistical surveys, when subpopulations within an overall population vary, it is advantageous to sample each subpopulation (stratum) independently. Stratification is the process of dividing members of the population into homogeneous subgroups before sampling. The strata should be mutually exclusive: every element in the population must be assigned to only one stratum. The strata should also be collectively exhaustive: no population element can be excluded. Then simple random sampling or systematic sampling is applied within each stratum. This often improves the representativeness of the sample by reducing sampling error. It can produce a weighted mean that has less variability than the arithmetic mean of a simple random sample of the population.

In computational statistics, stratified sampling is a method of variance reduction when Monte Carlo methods are used to estimate population statistics from a known population.

Stratified sampling strategies

- Proportionate allocation uses a sampling fraction in each of the strata that is proportional to that of the total population. For instance, if the population X consists of m in the male stratum and f in the female stratum (where m + f = X), then the relative size of the two samples (x1 = m/K males, x2 = f/K females) should reflect this proportion.

- Optimum allocation (or disproportionate allocation) makes the sampling fraction in each stratum proportionate to the standard deviation of the distribution of the variable. Larger samples are taken in the strata with the greatest variability to generate the least possible sampling variance.
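The two strategies can be compared side by side. The Python sketch below implements proportionate allocation and, as one common form of optimum allocation, Neyman allocation, which weights each stratum by its size multiplied by its standard deviation and assumes equal sampling cost per unit. The function names, stratum sizes, and standard deviations are hypothetical, and the results are left unrounded.

```python
def proportionate_allocation(sizes, n):
    """Split a sample of n across strata in proportion to stratum size."""
    total = sum(sizes)
    return [n * size / total for size in sizes]

def neyman_allocation(sizes, std_devs, n):
    """Split a sample of n in proportion to stratum size times standard deviation."""
    weights = [size * sd for size, sd in zip(sizes, std_devs)]
    total = sum(weights)
    return [n * w / total for w in weights]

# Hypothetical strata: equal sizes, but the second is three times as variable.
sizes = [100, 100]
std_devs = [1.0, 3.0]

proportionate = proportionate_allocation(sizes, 40)
optimum = neyman_allocation(sizes, std_devs, 40)
print(proportionate, optimum)
```

With equal stratum sizes, the Neyman weights reduce to the standard deviations alone, so the more variable stratum receives three quarters of the sample.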

Stratified sampling ensures that at least one observation is picked from each of the strata, even if probability of it being selected is far less than 1. Hence the statistical properties of the population may not be preserved if there are thin strata. A rule of thumb that is used to ensure this is that the population should consist of no more than six strata, but depending on special cases the rule can change - for example if there are 100 strata each with 1 million observations, it is perfectly fine to do a 10% stratified sampling on them.

A real-world example of using stratified sampling would be for a political survey. If the respondents needed to reflect the diversity of the population, the researcher would specifically seek to include participants of various minority groups such as race or religion, based on their proportionality to the total population as mentioned above. A stratified survey could thus claim to be more representative of the population than a survey of simple random sampling or systematic sampling.

Similarly, if population density varies greatly within a region, stratified sampling will ensure that estimates can be made with equal accuracy in different parts of the region, and that comparisons of sub-regions can be made with equal statistical power. For example, in Ontario a survey taken throughout the province might use a larger sampling fraction in the less populated north, since the disparity in population between north and south is so great that a sampling fraction based on the provincial sample as a whole might result in the collection of only a handful of data from the north.

Randomized stratification can also be used to improve population representativeness in a study.

Advantages

If the population is large and enough resources are available, usually one will use multi-stage sampling. In such situations, usually stratified sampling will be done at some stages. However, the main advantage remains that stratified sampling is the most representative of a population.

Disadvantages

Stratified sampling is not useful when the population cannot be exhaustively partitioned into disjoint subgroups. It would be a misapplication of the technique to make subgroups' sample sizes proportional to the amount of data available from the subgroups, rather than scaling sample sizes to subgroup sizes (or to their variances, if known to vary significantly e.g. by means of an F Test). Data representing each subgroup are taken to be of equal importance if suspected variation among them warrants stratified sampling. If subgroups' variances differ significantly and the data need to be stratified by variance, then there is no way to make the subgroup sample sizes proportional (at the same time) to the subgroups' sizes within the total population. For an efficient way to partition sampling resources among groups that vary in their means, their variances, and their costs, see "optimum allocation".

Practical example

In general the size of the sample in each stratum is taken in proportion to the size of the stratum. This is called proportional allocation. Suppose that in a company there are the following staff:[1]

- male, full-time: 90

- male, part-time: 18

- female, full-time: 9

- female, part-time: 63

- Total: 180

and we are asked to take a sample of 40 staff, stratified according to the above categories.

The first step is to find the total number of staff (180) and calculate the percentage in each group.

- % male, full-time = 90 / 180 = 50%

- % male, part-time = 18 / 180 = 10%

- % female, full-time = 9 / 180 = 5%

- % female, part-time = 63 / 180 = 35%

This tells us that of our sample of 40,

- 50% should be male, full-time.

- 10% should be male, part-time.

- 5% should be female, full-time.

- 35% should be female, part-time.

- 50% of 40 is 20.

- 10% of 40 is 4.

- 5% of 40 is 2.

- 35% of 40 is 14.

Another easy way without having to calculate the percentage is to multiply each group size by the sample size and divide by the total population size (size of entire staff):

- male, full-time = 90 x (40 / 180) = 20

- male, part-time = 18 x (40 / 180) = 4

- female, full-time = 9 x (40 / 180) = 2

- female, part-time = 63 x (40 / 180) = 14
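The same arithmetic can be written as a short Python check using the staff figures from the example. The variable names are illustrative. In this example every share comes out as a whole number; in general a rounding rule would be needed, which this sketch does not attempt.

```python
# Staff counts from the worked example.
staff = {
    "male, full-time": 90,
    "male, part-time": 18,
    "female, full-time": 9,
    "female, part-time": 63,
}
sample_size = 40
total = sum(staff.values())

# Group size multiplied by sample size, divided by total population size.
allocation = {group: size * sample_size / total for group, size in staff.items()}

for group, share in allocation.items():
    print(f"{group}: {share:g}")
```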
